
SHIFT-WIKI - Sjoerd Hooft's InFormation Technology

This WIKI is my personal documentation blog. Please enjoy it and feel free to reach out through Bluesky if you have a question, remark, improvement or observation.

Use Azure Bastion to RDP over SSH

Summary: This wiki page shows how to use Azure Bastion to RDP to Azure VMs over SSH.

Date: 8 February 2026

This page is a follow-up on Using VS Code and Lens to connect to a private AKS cluster, in which I explain how to use a bastion and a jumpbox VM to connect to a private AKS cluster. The last step there is to use the Azure CLI to create an SSH tunnel, over which Lens can connect to the cluster. But what if you also have some Windows VMs in that same network? In that case, it's quite easy to extend the existing SSH tunnel so that you can also RDP to those VMs over the same tunnel.

Recap of What we Already Have

What we already have are the following commands. We log in to Azure, set the subscription and then create an SSH tunnel to the jumpbox VM through the bastion:

# Login to Azure
az config set core.login_experience_v2=off
az config set core.enable_broker_on_windows=false
az login --tenant 7e4an71d-a123-a123-a123-abcd12345678
# Set the subscription to the bastion subscription
az account set --subscription aa123456-a123-a123-a123-abcd12345678
# Create a ssh tunnel to the jumpbox through the bastion
az network bastion ssh --name bas-001 --resource-group "rg-bastion" --target-resource-id /subscriptions/aa123456-a123-a123-a123-abcd12345678/resourceGroups/rg-cluster/providers/Microsoft.Compute/virtualMachines/vm-jumpbox --auth-type AAD -- -L 6443:aks-privatecluster-m8k6low2.privatelink.westeurope.azmk8s.io:443

Extend the SSH Tunnel for RDP

Now, to also use the same SSH tunnel for RDP towards some of the VMs we have in Azure, we can simply add some additional port forwarding rules to the SSH tunnel command. Each rule has the form -L local-port:target-host:target-port. In the example below, I added additional port forwarding rules for 2 VMs. For easy reference, I also added some Write-Host statements to show which port corresponds to which VM:

"Write-Host 'Start ssh client tunnel to Jumpbox (vm-jumpbox)' -ForegroundColor Red;",
"Write-Host ' 6443:aks-euw-vtx-prd-privatecluster' -ForegroundColor Red;",
"Write-Host ' vm-app1.getshifting.local: mstsc /v:127.0.0.1:19001' -ForegroundColor Red;",
"Write-Host ' vm-app2.getshifting.local: mstsc /v:127.0.0.1:19002' -ForegroundColor Red;",
"az network bastion ssh --name bas-001 --resource-group rg-bastion --target-resource-id /subscriptions/aa123456-a123-a123-a123-abcd12345678/resourceGroups/rg-cluster/providers/Microsoft.Compute/virtualMachines/vm-jumpbox --auth-type AAD -- -L 6443:aks-privatecluster-m8k6low2.privatelink.westeurope.azmk8s.io:443 -L 19001:vm-app1.getshifting.local:3389 -L 19002:vm-app2.getshifting.local:3389;"

You can now use RDP towards the VMs using an alternate localhost port, for example mstsc /v:127.0.0.1:19001 for vm-app1 and mstsc /v:127.0.0.1:19002 for vm-app2.
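When the list of VMs grows, typing the forwarding rules by hand gets error-prone. The following is a minimal sketch, assuming you run the Azure CLI from a POSIX shell (for example in WSL) and want sequential local ports. The function name build_rdp_forwards is hypothetical and not part of the Azure CLI; it only prints the rules so you can paste them after the `--` in the tunnel command:

```shell
# Hypothetical helper: print one "-L <local-port>:<host>:3389" rule per host,
# starting at the given local port and counting upwards.
build_rdp_forwards() {
  local port="$1"
  shift
  local host rules=""
  for host in "$@"; do
    rules="${rules}-L ${port}:${host}:3389 "
    port=$((port + 1))
  done
  # Strip the trailing space before printing
  printf '%s\n' "${rules% }"
}

# Example: prints the two RDP forwarding rules for vm-app1 and vm-app2
build_rdp_forwards 19001 vm-app1.getshifting.local vm-app2.getshifting.local
```

The local ports are arbitrary; any free port on your workstation works, as long as you use the same port in the mstsc command.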


Start Managing an Argo CD Application with Argo CD Itself

Summary: This wiki page shows how to manage an Argo CD application with Argo CD itself.

Date: 8 February 2026

When you start with Argo CD, you typically start simple, with just an application that you manage yourself. However, as you build up knowledge and confidence, there comes a time when you no longer want to manage your Argo CD application manually, but instead want to manage it with Argo CD itself. On this page, I'll show you how to do just that. For this wiki page I'm assuming you already have an Argo CD application up and running, and have already created a manifest which controls your Argo CD application. It should do exactly the same as the application you already have. The steps below show how to move from a manually managed Argo CD application to an Argo CD application that is managed by Argo CD itself and is part of the repository that is part of the GitOps flow.

Steps to Manage an Argo CD Application with Argo CD Itself

The most important aspect in this process is to make sure your actions do not interfere with the other Kubernetes resources that are managed by Argo CD. As we have to delete the existing application (as it was created manually), we have to make sure all other resources remain untouched. To quote from the Argo CD documentation:

Apps can be deleted with or without a cascade option. A cascade delete, deletes both the app and its resources, rather than only the app.

So, to make sure we do not touch the existing resources, we must make sure to delete without the cascade. To do so, we will remove any finalizers from the application, if they exist, and then delete the application. Let's first take a good look at the application:

# Check for available argocd apps
kubectl get app -A
# Export the app as yaml so we can check for metadata.finalizers
kubectl get app argoapp1 -o yaml > argoapp1.yaml

We have now exported the application to a yaml file, so we can check for the finalizers. Open the yaml file in your favorite editor and check the metadata section for finalizers. If they exist, remove them like this:

# On linux
kubectl patch app argoapp1 -p '{"metadata": {"finalizers": null}}' --type merge
# On windows, use escapes for the double quotes
kubectl patch app argoapp1 -p '{\"metadata\": {\"finalizers\": null}}' --type merge

If you're not sure a finalizer exists, running the command above will not cause any harm, and will show you an output like application.argoproj.io/argoapp1 patched (no change).

Now that we have removed the finalizers, we can safely delete the application:

kubectl delete app argoapp1

Sometimes, you might also want to start managing the Argo CD projects. For this, you can follow the same procedure, but make sure to replace the resource type from app to appproject in the commands above.
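If you want a safety net for the delete step, the check and the delete can be combined. This is a minimal sketch, assuming a POSIX shell and that kubectl points at the right cluster and namespace. The function name delete_app_without_cascade is hypothetical; it only uses the kubectl get and kubectl delete commands shown above and refuses to delete while finalizers are still present:

```shell
# Hypothetical helper: delete an Argo CD application only when it carries no
# finalizers, so the delete cannot cascade to the resources it manages.
delete_app_without_cascade() {
  local app="$1"
  local finalizers
  # jsonpath prints nothing when metadata.finalizers is absent
  finalizers="$(kubectl get app "$app" -o jsonpath='{.metadata.finalizers}')" || return 1
  if [ -n "$finalizers" ] && [ "$finalizers" != "[]" ]; then
    echo "Refusing to delete $app, finalizers still present: $finalizers" >&2
    return 1
  fi
  kubectl delete app "$app"
}

# Usage (not executed here):
#   delete_app_without_cascade argoapp1
```

If the function refuses, run the patch command from above first and try again.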

Apply the Manifest

Note that the application is now removed from Argo CD, so there is one more step we must take. We now have to manually apply the manifest that is already part of the GitOps repository, so that Argo CD can pick it up and start managing its own applications:

kubectl apply -f argocd-apps.yaml


Creating a Helm Chart for Azure SecretProvider

Summary: This wiki page shows how to create a helm chart for the Azure Secretprovider.

Date: 8 February 2026

This is the wiki page on how the helm chart azure-secretprovider was created. The helm chart is used to create a SecretProviderClass manifest for AKS with workload identity enabled. After creating the chart and some example values file, I'll also show how to create a helm repository in Azure and how to publish the chart there. Finally, I'll also show an overview of how a secretprovider actually works in AKS with workload identity enabled.

Create a Helm Chart

Creating a Helm chart is very straightforward. If you already have a working environment with helm installed, you can simply run the following command to create a new chart:

helm create azure-secretprovider

This command will generate a directory structure for your Helm chart with some default files and folders. After that, following the documentation, the next step is to remove the generated files and add your own. Creating a helm chart is very easy because all you need to do is add a manifest file in the `templates` folder. That's all. Whenever you then do a helm install, it will just install that manifest. Of course, you can also use values, variables and more, which is what we'll do.

I used the following steps to create the chart, using the WSL installation I described earlier:

helm version

# Output: version.BuildInfo{Version:"v3.16.1", GitCommit:"5a5449dc42be07001fd5771d56429132984ab3ab", GitTreeState:"clean", GoVersion:"go1.22.7"}

helm create azure-secretprovider

A new folder is created called `azure-secretprovider`. After checking the files, I performed the following steps:

- Removed the empty directory `charts`

- Removed the directory `templates/tests`

- Updated the Chart.yaml file with relevant information about the chart

- From the `templates` folder, removed all files except for `_helpers.tpl` and `NOTES.txt`

- Updated the `NOTES.txt` file with a link to this wiki page

- Created a new file `secretproviderclass.yaml` in the `templates` folder

- Created a template for the SecretProviderClass manifest, using values from `values.yaml`

- Removed the content of the default `values.yaml` file and updated it with relevant parameters for the SecretProviderClass

To check the helm chart, see my github repository.

Validate the Helm Chart

First, we can validate the chart using helm lint:

$ helm lint ./azure-secretprovider
==> Linting ./azure-secretprovider
1 chart(s) linted, 0 chart(s) failed

Next, we can render the template with the default (still empty) values using helm template:

$ helm template azure-secretprovider ./azure-secretprovider
---
# Source: azure-secretprovider/templates/secretproviderclass.yaml
apiVersion: secrets-store.csi.x-k8s.io/v1
kind: SecretProviderClass
metadata:
  name: azure-secretprovider
spec:
  provider: azure
  parameters:
    keyvaultName:
    objects: |
      array:
    tenantId:
    usePodIdentity: "false"
    clientID:

So far, everything looks good. Now, let's create some values files.

Values Files

I have created various values files for different use cases.

Grafana

The following values file creates two secrets, one for the Grafana admin credentials and one for the Grafana Slack Webhook. The first secret has two keys, while the second secret has one key:

tenantId: 7e4an71d-a123-a123-a123-abcd12345678
clientID: acc78f4a-932a-415f-a77e-e7e1071a0161
keyvaultName: kv-euw-shift
secrets:
  - Grafana-AdminPassword
  - Grafana-AdminUsername
  - Grafana-SlackWebHook
secretObjects:
  - secretName: grafana-admin
    data:
      - key: admin-password
        objectName: Grafana-AdminPassword
      - key: admin-user
        objectName: Grafana-AdminUsername
    type: Opaque
  - secretName: grafana-slackwebhook
    data:
      - key: SLACKWEBHOOK
        objectName: Grafana-SlackWebHook
    type: Opaque

Rendering the chart with this values file produces the following manifest:

$ helm template azure-secretprovider ./azure-secretprovider --values ./values/azure-secretprovider-grafana.yaml
---
# Source: azure-secretprovider/templates/secretproviderclass.yaml
apiVersion: secrets-store.csi.x-k8s.io/v1
kind: SecretProviderClass
metadata:
  name: azure-secretprovider
spec:
  provider: azure
  parameters:
    keyvaultName: kv-euw-shift
    objects: |
      array:
        - |
          objectName: Grafana-AdminPassword
          objectType: secret
        - |
          objectName: Grafana-AdminUsername
          objectType: secret
        - |
          objectName: Grafana-SlackWebHook
          objectType: secret
    tenantId: 7e4an71d-a123-a123-a123-abcd12345678
    usePodIdentity: "false"
    clientID: acc78f4a-932a-415f-a77e-e7e1071a0161
  secretObjects:
    - data:
        - key: admin-password
          objectName: Grafana-AdminPassword
        - key: admin-user
          objectName: Grafana-AdminUsername
      secretName: grafana-admin
      type: Opaque
    - data:
        - key: SLACKWEBHOOK
          objectName: Grafana-SlackWebHook
      secretName: grafana-slackwebhook
      type: Opaque

If needed, we could override one of the settings in the values file using --set:

$ helm template azure-secretprovider ./azure-secretprovider --values ./values/azure-secretprovider-grafana.yaml --set keyvaultName=kv-euw-shift-dev

Certificate

The following values file creates a secret for a certificate:

secrets:
  - getshifting-tls-cert
  - getshifting-tls-key
secretObjects:
  - secretName: getshifting-tls
    data:
      - key: tls.crt
        objectName: getshifting-tls-cert
      - key: tls.key
        objectName: getshifting-tls-key
    type: kubernetes.io/tls

Creating a Helm Repository in Azure

To create a helm repository in Azure, we can use Azure Container Registry (ACR). ACR supports hosting helm charts, so we can push our chart to ACR and then use it as a helm repository. To create the repository, we can go to Azure Cloud Shell and run the following commands:

# Variables
$project = "acrshift"
$rg = "rg-$project"
$loc = "westeurope"
# Create resource group
az group create `
  --name $rg `
  --location $loc
# Create Azure Container Registry
az acr create `
  --name "$project" `
  --resource-group $rg `
  --sku Basic `
  --location $loc `
  --public-network-enabled true

Push the Helm Chart to ACR

Now that we have created an Azure Container Registry, we can package our helm chart and push it to the ACR. Let's first package the chart:

helm package ./azure-secretprovider

Note that in the output of the command, you will see the path to the generated .tgz file. This is the file that we will push to ACR.
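helm package names the archive after the name and version fields in Chart.yaml, as name-version.tgz. If you want to script the push, here is a minimal sketch that derives the file name instead of hard-coding it. It assumes a POSIX shell and a Chart.yaml where name and version are simple top-level values, as generated by helm create. The function names chart_field and chart_package_name are hypothetical:

```shell
# Hypothetical helper: read a simple top-level field from <chart-dir>/Chart.yaml.
# Assumes "field: value" on one line; surrounding quotes are stripped.
chart_field() {
  sed -n "s/^$2:[[:space:]]*//p" "$1/Chart.yaml" | head -n 1 | tr -d "\"'"
}

# Print the archive name that helm package generates for the chart directory.
chart_package_name() {
  printf '%s-%s.tgz\n' "$(chart_field "$1" name)" "$(chart_field "$1" version)"
}

# Usage (not executed here):
#   helm package ./azure-secretprovider
#   ls "$(chart_package_name ./azure-secretprovider)"
```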

Next, we can push the chart to ACR. To do so, let's log in to the ACR helm repository, and then push the chart:

az login
# Make sure you're logged in to the correct subscription
az account set --subscription "30b3c71d-a123-a123-a123-abcd12345678"
# Login to ACR helm repository
USER_NAME="00000000-0000-0000-0000-000000000000"
ACR_NAME="acrshift"
PASSWORD=$(az acr login --name "$ACR_NAME" --expose-token --output tsv --query accessToken --only-show-errors)
echo "$PASSWORD" | helm registry login "$ACR_NAME.azurecr.io" --username $USER_NAME --password-stdin
# Push the chart to ACR
helm push azure-secretprovider-0.1.0.tgz oci://"$ACR_NAME".azurecr.io/helm

Using the Helm Chart from ACR

Once the chart is pushed to the ACR, we can check the ACR for existing charts and view their manifests:

# List the helm charts in the ACR
$ az acr repository list --name $ACR_NAME
[
  "helm/azure-secretprovider"
]
# Show the details of the helm chart in the ACR
$ az acr repository show --name $ACR_NAME --repository helm/azure-secretprovider
{
  "changeableAttributes": {
    "deleteEnabled": true,
    "listEnabled": true,
    "readEnabled": true,
    "writeEnabled": true
  },
  "createdTime": "2026-02-06T16:32:05.1518971Z",
  "imageName": "helm/azure-secretprovider",
  "lastUpdateTime": "2026-02-06T16:32:05.3101879Z",
  "manifestCount": 1,
  "registry": "acrshift.azurecr.io",
  "tagCount": 1
}

To use the helm chart from ACR, we can reference it directly by its oci:// URL. OCI registries are not added with helm repo add, so there is no repository to add or update first; the helm registry login from the previous step is enough:

# Render the chart from ACR using a local values file
$ helm template azure-secretprovider oci://$ACR_NAME.azurecr.io/helm/azure-secretprovider --values ./values/azure-secretprovider-grafana.yaml
Pulled: acrshift.azurecr.io/helm/azure-secretprovider:0.1.0
Digest: sha256:e10671232d69e5da0d662fd2c7bacfb621caf04a100d545334bf96b045d5315c
---
# Source: azure-secretprovider/templates/secretproviderclass.yaml
apiVersion: secrets-store.csi.x-k8s.io/v1
kind: SecretProviderClass
metadata:
  name: azure-secretprovider
spec:
  provider: azure
  parameters:
    keyvaultName: kv-euw-shift
    objects: |
      array:
        - |
          objectName: Grafana-AdminPassword
          objectType: secret
        - |
          objectName: Grafana-AdminUsername
          objectType: secret
        - |
          objectName: Grafana-SlackWebHook
          objectType: secret
    tenantId: 7e4an71d-a123-a123-a123-abcd12345678
    usePodIdentity: "false"
    clientID: acc78f4a-932a-415f-a77e-e7e1071a0161
  secretObjects:
    - data:
        - key: admin-password
          objectName: Grafana-AdminPassword
        - key: admin-user
          objectName: Grafana-AdminUsername
      secretName: grafana-admin
      type: Opaque
    - data:
        - key: SLACKWEBHOOK
          objectName: Grafana-SlackWebHook
      secretName: grafana-slackwebhook
      type: Opaque

As you can see from the 'Pulled' output, the chart is now pulled from the Azure Container Registry, and then together with the values file successfully rendered.

How does the SecretProviderClass work in AKS with Workload Identity Enabled

Now that we have created a helm chart for creating an Azure Secret Provider targeted on AKS with workload identity enabled, I also want to briefly show how the secretprovider works. Because of the workload identity and the central role of a secret provider, I created a diagram to show how the secretprovider works in AKS with workload identity enabled:

As you may note, the overview is for a secretprovider for grafana. This allowed for something extra, because in this setup grafana is also configured to use workload identity to access Azure monitor.

Note that the helm chart for Grafana is listed as a mix of the helm chart and the rendered manifest. This way it shows exactly what values are important for this setup.

Troubleshooting the SecretProviderClass

When troubleshooting the SecretProviderClass, there are a few steps that you should usually take to find the cause. First, you need to find the node where the pod is running that wants to use the SecretProviderClass. Next, you need the logs of the aks-secrets-store-csi-driver pod that is running on that node. In the below example, we'll use grafana again:

# Get the node where the pod is running that wants to use the SecretProviderClass
kubectl get pods -o wide | sls grafana
# From the output, get all the pods running on the same node (in all namespaces)
kubectl get pods -A -o wide | sls <node-name>
# Get the logs of the aks-secrets-store-csi-driver pod that is running on that node
kubectl logs <aks-secrets-store-csi-driver-pod-name> -n kube-system

Note that these commands are run in PowerShell, with sls (Select-String) used to filter the output. You can also do this in bash with grep.
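For bash, the same lookup can be scripted without reading the table output by eye. This is a minimal sketch, assuming a POSIX shell, that the CSI driver pods run in kube-system and that their names contain secrets-store-csi-driver. The function names pod_node and csi_driver_pod_on_node are hypothetical:

```shell
# Print the node a pod is scheduled on. $1 = pod name, $2 = namespace.
pod_node() {
  kubectl get pod "$1" -n "$2" -o jsonpath='{.spec.nodeName}'
}

# Print the name of the secrets-store CSI driver pod running on node $1.
csi_driver_pod_on_node() {
  kubectl get pods -n kube-system --no-headers \
    -o custom-columns=NAME:.metadata.name,NODE:.spec.nodeName |
    awk -v node="$1" '$2 == node && $1 ~ /secrets-store-csi-driver/ { print $1 }'
}

# Usage (not executed here), with placeholders for your own pod and namespace:
#   node="$(pod_node <pod-name> <namespace>)"
#   kubectl logs "$(csi_driver_pod_on_node "$node")" -n kube-system
```

Using custom-columns instead of -o wide keeps the output to exactly two columns, which makes the awk filter independent of the other columns kubectl prints.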

Below we'll handle some of the errors I've encountered over time.

Secrets namespace/secrets-store-creds not found

The error:

E0901 07:24:28.764891 1 reconciler.go:237] "failed to reconcile spc for pod" err="failed to get node publish secret namespace/secrets-store-creds, err: secrets \"namespace/secrets-store-creds\" not found" spc="vertex-secretprovider-grafana" pod="grafana-7fc7fb9fb4-f97hc" controller="rotation"
E0901 07:24:28.764944 1 reconciler.go:365] "nodePublishSecretRef not found. If the secret with name exists in namespace, label the secret by running 'kubectl label secret secrets-store-creds secrets-store.csi.k8s.io/used=true -n namespace" err="secrets \"namespace/secrets-store-creds\" not found" name="secrets-store-creds" namespace="namespace"

This error is encountered when you missed one of the steps in the Secrets Store CSI Driver configuration. You can solve the error by labeling the secrets-store-creds secret so the CSI driver knows it can use it:

kubectl label secret secrets-store-creds secrets-store.csi.k8s.io/used=true -n namespace

Failed to patch secret data

The error:

E0105 23:01:36.680891 1 reconciler.go:497] "failed to patch secret data" err="Secret \"grafana-admin\" not found" secret="namespace/grafana-admin" spc="namespace/vertex-secretprovider-grafana" controller="rotation"

This error happens when the secret that the SecretProviderClass is trying to create already exists, for example when it was manually created before. You could either delete the existing secret, or label the secret just as before:

kubectl label secret grafana-admin secrets-store.csi.k8s.io/managed=true -n namespace


Configure Request Header Forwarding in AWS Application Load Balancer and Apache

Summary: This wiki page shows how to configure both AWS Application Load Balancer and Apache to forward the request header to a backend container.

Date: 30 January 2026

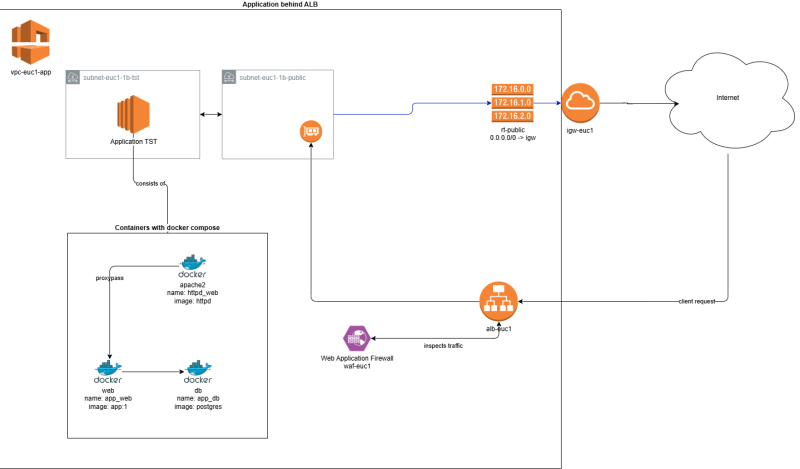

To start, this is the setup we're talking about:

As you can see, the application load balancer receives the request from clients and needs to forward the client IP address to the apache container, which then forwards it to the backend application container. To achieve this, we need to set the “X-Forwarded-For” header in both the load balancer and apache configurations.
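To make the header flow concrete, here is a minimal shell sketch of what happens to the X-Forwarded-For value when each hop appends the address it received the request from. It is an illustration only, not how the load balancer or Apache are implemented. The function names and both IP addresses are hypothetical:

```shell
# Illustration only: mimic what "append" does to the X-Forwarded-For value.
# $1 = existing header value (may be empty), $2 = address of the previous hop
xff_append() {
  if [ -n "$1" ]; then
    printf '%s, %s\n' "$1" "$2"
  else
    printf '%s\n' "$2"
  fi
}

# The original client is the left-most entry of the comma-separated list.
xff_client_ip() {
  printf '%s\n' "${1%%,*}"
}

header="$(xff_append "" "203.0.113.7")"       # load balancer adds the client IP
header="$(xff_append "$header" "10.0.1.25")"  # proxy adds the load balancer IP
echo "$header"           # prints: 203.0.113.7, 10.0.1.25
xff_client_ip "$header"  # prints: 203.0.113.7
```

Keep in mind that with append, a value the client sent itself stays at the front of the list, so the backend should only trust the entries added by hops you control.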

AWS Application Load Balancer Configuration

The AWS Application Load Balancer is the easiest part in this story. The load balancer has three options for handling the X-Forwarded-For header:

1. Append (default): If the value is append, the Application Load Balancer adds the client IP address (of the last hop) to the X-Forwarded-For header in the HTTP request before it sends it to targets.

2. Preserve: If the value is preserve, the Application Load Balancer preserves the X-Forwarded-For header in the HTTP request, and sends it to targets without any change.

3. Remove: If the value is remove, the Application Load Balancer removes the X-Forwarded-For header in the HTTP request before it sends it to targets.

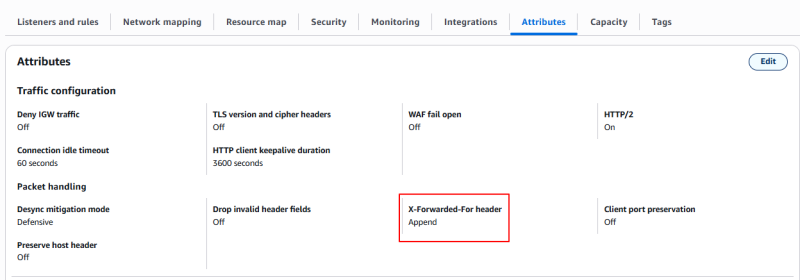

For us, the append option will work best, and it should be the default. Quickly checking the settings confirms this:

Apache Configuration

The apache requestheaders are a bit more work. Again, there are several options to choose from:

1. set: The request header is set, replacing any previous header with this name

2. append: The request header is appended to any existing header of the same name. When a new value is merged onto an existing header it is separated from the existing header with a comma. This is the HTTP standard way of giving a header multiple values.

3. add: The request header is added to the existing set of headers, even if this header already exists. This can result in two (or more) headers having the same name. This can lead to unforeseen consequences, and in general append should be used instead.

4. unset: The request header of this name is removed, if it exists. If there are multiple headers of the same name, all will be removed.

Again, the append option will work best for us. Besides that, we also need to enable early processing to ensure the header is added before other modules process the request. To configure this, we find the section in our httpd-sites-enable file (app-ssl.conf) and locate the proxy pass section. We then add the request header line as follows:

ProxyRequests Off

<Proxy *>

Order deny,allow

Allow from all

</Proxy>

ProxyVia On

ProxyErrorOverride On

ServerName getshifting.com

DocumentRoot /usr/local/apache2/htdocs

ErrorDocument 503 /custom_50x.html

ErrorDocument 403 /custom_50x.html

ErrorDocument 404 /custom_50x.html

ProxyPass /custom_50x.html !

ProxyPass /longpolling/ http://app_web:8080/longpolling/

ProxyPassReverse /longpolling/ http://app_web:8080/longpolling/

ProxyPass / http://app_web:8081/

ProxyPassReverse / http://app_web:8081/

RequestHeader append X-Forwarded-Proto "https" early

SetEnv proxy-nokeepalive 1

RewriteEngine on

Testing the Configuration

To test the configuration, the easiest way is to add the client source IP address to the apache logs. We locate the apache config file (httpd.conf) and update the log format as follows:

#LogFormat "%h %l %u %t \"%r\" %>s %b" common
LogFormat "%h %{X-Forwarded-For}i %l %u %t \"%r\" %>s %b" common

Restart the Apache container

I prefer restarting the whole stack, so I know everything works as expected:

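cd /data/app/docker
docker-compose -f docker-compose.yml down
docker-compose -f docker-compose.yml up -d
# Follow the logs of the whole stack (stop with Ctrl+C)
docker-compose logs -f
# Or follow only the logs of the Apache container
docker logs --follow --timestamps httpd_web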
